Real-Time Video Anonymizer on FPGA

Abstract


A real-time FPGA-based system that detects and blurs faces in video streams to ensure privacy with minimal latency.

Aim

- Design and implement a real-time video anonymization system using FPGA

- Achieve face detection and blurring with minimal latency (target < 30ms)

- Process standard video resolution (720p) at 30 frames per second

- Develop a modular and scalable architecture

- Implement the system using Verilog HDL
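For scale, the 720p at 30 fps target fixes the throughput the pipeline must sustain. The figures below assume that 720p means 1280 × 720 and, for the bandwidth line only, that each pixel travels as one 32-bit word on the DMA path. The report does not state the word size.

$$
\begin{aligned}
N_\text{frame} &= 1280 \times 720 = 921\,600 \text{ pixels} \\
R_\text{pixel} &= 30 \times N_\text{frame} = 27\,648\,000 \text{ pixels/s} \approx 27.6 \text{ Mpixel/s} \\
T_\text{frame} &= \tfrac{1}{30}\text{ s} \approx 33.3 \text{ ms} \\
B_\text{DMA} &= 4\text{ B} \times R_\text{pixel} \approx 110.6 \text{ MB/s per direction}
\end{aligned}
$$

A datapath that accepts one pixel per clock cycle would therefore need to run at about 27.6 MHz or faster. The < 30 ms latency target is slightly shorter than one frame period.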

Introduction

Growing concerns over privacy and surveillance have created a need for real-time video anonymization. This project implements a real-time video anonymization system on an FPGA that automatically detects and blurs faces or other sensitive regions in video streams with minimal latency. Because the FPGA processes pixels in dedicated, parallel hardware rather than on a general-purpose CPU, this approach can achieve lower latency than software-only solutions.

Literature Survey and Technologies Used

Literature Survey

- Real-Time Video Anonymizer by Sean Ogden and Feiran Chen

Implemented an FPGA-based face anonymization system that uses modular design principles for efficient detection and blurring. It demonstrated that real-time anonymization is achievable on embedded hardware platforms.

- Real-Time Face Detection and Tracking by Thu-Thao Nguyen (Cornell University, 2012)

Developed a real-time face detection and tracking system using embedded hardware and camera interfaces. The project illustrated practical challenges in implementing detection pipelines and provided reference methods for reliable tracking.

Technologies Used

- Verilog HDL: Used for designing and implementing the custom hardware logic blocks required for processing video frames and the face blurring algorithm

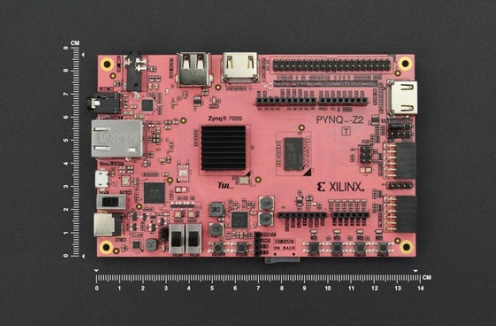

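- PYNQ-Z2: Development board built around the Xilinx Zynq-7000 SoC, which combines a dual-core ARM Cortex-A9 processing system with FPGA programmable logic.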

- Xilinx Vivado: A toolchain employed for simulation, synthesis, and programming the FPGA.

- Webcam + HDMI Interface: Interfaces used for capturing real-time video and displaying the output.

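- Jupyter Notebook: Used on the PS to run the Python control code, including webcam capture with OpenCV, display of the output, and real-time audio processing and anonymization.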

Methodology

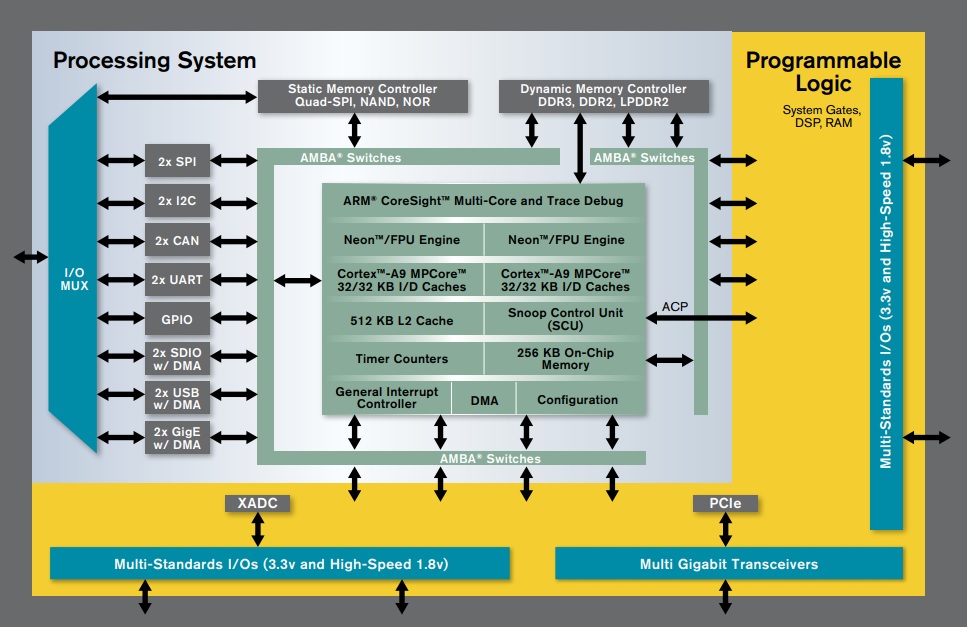

This project implements a real-time anonymization pipeline using the Zynq-7000 SoC on the PYNQ-Z2 board, which combines a dual-core ARM Cortex-A9 processor (PS) with a Xilinx 7-series FPGA fabric (PL). This heterogeneous architecture allows for high-level control and visualization via the PS, while leveraging the speed and parallelism of custom hardware in the PL for efficient processing.

The Processing System (PS) runs Linux and manages high-level tasks such as camera interfacing, DMA setup, data transfer, and display.

The Programmable Logic (PL) is used for high-speed, frame-level image processing tasks like skin detection and blurring, implemented as a custom Verilog IP core.

Together, the PS and PL collaborate through AXI interfaces and DMA engines, allowing video frames and control signals to be efficiently shared across the two domains.

System Architecture and Data Flow

The anonymization pipeline proceeds as follows:

- The PS captures webcam input using OpenCV in a Jupyter Notebook.

- Captured frames are sent to the PL via DMA for processing.

- The custom IP core:

  - Receives the frame.

  - Detects skin regions.

  - Applies blurring within the detected regions.

- The anonymized frame is sent back to the PS via DMA.

- The PS displays the frame using OpenCV.
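To make the PS-to-PL data path concrete, the Python sketch below flattens a frame into 32-bit words, hands them to a stand-in for the IP core, and rebuilds the frame from the words that come back. The word layout (one pixel per word, `0x00RRGGBB`) and all function names are hypothetical, because the report does not specify the stream format. OpenCV returns frames in BGR channel order, so a real driver would need to pack channels in whatever order the Verilog core expects.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b), 8 bits per channel
Frame = List[List[Pixel]]      # list of rows


def pack_frame(frame: Frame) -> List[int]:
    """Flatten a frame row by row into 32-bit words laid out as 0x00RRGGBB (assumed layout)."""
    return [(r << 16) | (g << 8) | b for row in frame for (r, g, b) in row]


def unpack_frame(words: List[int], width: int) -> Frame:
    """Inverse of pack_frame: split words into (r, g, b) pixels and regroup into rows of `width`."""
    pixels = [((w >> 16) & 0xFF, (w >> 8) & 0xFF, w & 0xFF) for w in words]
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


def round_trip(frame: Frame, ip_core: Callable[[List[int]], List[int]]) -> Frame:
    """Model of PS -> PL -> PS: pack, pass the words to a stand-in IP core, unpack the result."""
    width = len(frame[0])
    return unpack_frame(ip_core(pack_frame(frame)), width)


if __name__ == "__main__":
    frame = [[(1, 2, 3), (4, 5, 6)], [(7, 8, 9), (10, 11, 12)]]
    # Identity stand-in for the hardware: the frame should come back unchanged.
    assert round_trip(frame, lambda words: words) == frame
```

On the board, the packed words would presumably live in a buffer from `pynq.allocate` and be moved with the AXI DMA driver's `sendchannel.transfer()` and `recvchannel.transfer()` calls. Passing `ip_core` as a plain function lets the same packing logic be checked off-board, for example with the identity function used above.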

Skin Detection and Blurring Algorithm

Implemented fully in Verilog inside the custom PL IP:

Skin Detection

- Each pixel is examined in RGB color space.

- A normalized skin detection rule is used, chosen after reviewing many research papers on image processing. After the R, G, and B values of a pixel are normalized, the pixel is classified as skin only if all three normalized values fall within the following ranges:

  `(r_norm >= 35 && r_norm <= 45) && (g_norm >= 28 && g_norm <= 36) && (b_norm >= 23 && b_norm <= 31)`

- The classification result (skin or non-skin) for each pixel is recorded in a buffer.
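The report does not give the normalization formula. The midpoints of the three ranges (40, 32, 27) sum to about 100, so the Python reference model below assumes that each channel is expressed as an integer percentage of R + G + B. This is a software golden model for checking simulation output, not the Verilog implementation. It also shows a division-free form of the same test, which may be cheaper in hardware because it needs only constant multiplications and comparisons. The function names are hypothetical.

```python
def normalized(r: int, g: int, b: int):
    """Scale each channel to an integer percentage of the pixel's total intensity (assumed)."""
    total = r + g + b
    if total == 0:
        return 0, 0, 0                      # black pixel: no meaningful chromaticity
    return (100 * r) // total, (100 * g) // total, (100 * b) // total


def is_skin(r: int, g: int, b: int) -> bool:
    """Apply the report's normalized-RGB ranges to one 8-bit pixel."""
    r_norm, g_norm, b_norm = normalized(r, g, b)
    return (35 <= r_norm <= 45) and (28 <= g_norm <= 36) and (23 <= b_norm <= 31)


def in_range_no_div(channel: int, total: int, lo: int, hi: int) -> bool:
    """Division-free equivalent of lo <= (100 * channel) // total <= hi, for total > 0."""
    return lo * total <= 100 * channel < (hi + 1) * total


def is_skin_no_div(r: int, g: int, b: int) -> bool:
    """Same classification as is_skin, using only multiplications and comparisons."""
    total = r + g + b
    if total == 0:
        return False
    return (in_range_no_div(r, total, 35, 45)
            and in_range_no_div(g, total, 28, 36)
            and in_range_no_div(b, total, 23, 31))
```

The rewrite is exact: `floor(100*c/total) >= lo` holds precisely when `100*c >= lo*total`, and `floor(100*c/total) <= hi` precisely when `100*c < (hi + 1)*total`. Running both functions over the same pixel grid is a quick way to confirm this.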

Blurring

Algorithm 1: Using Horizontal buffers

- Skin is detected using the normalized skin detection algorithm given above.

- One horizontal buffer is used to slide through the image and store the classifications as skin or not skin.

- The other horizontal buffer is used to store the pixels in the image.

- The average of the pixels in the second buffer is continuously computed.

- If the number of skin pixels in the first buffer exceeds a set threshold, the average of the pixels in the second buffer is output (blur); otherwise, the pixel is output unchanged.
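The following is a Python model of Algorithm 1 for a single image row, which could serve as a reference when checking simulation output. The window length and skin-count threshold are hypothetical parameters because the report does not state them. The window is assumed to be trailing (the most recent `window` pixels, as a shift register would hold), and the average is taken per channel with integer division.

```python
from collections import deque
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def blur_row(row: Sequence[Pixel], skin: Sequence[bool],
             window: int = 8, threshold: int = 4) -> List[Pixel]:
    """Reference model of the horizontal-buffer blur for one row.

    window and threshold are hypothetical defaults; the report does not give them.
    """
    pixels: deque = deque()   # second buffer: the most recent `window` pixels
    flags: deque = deque()    # first buffer: matching skin / non-skin labels
    sums = [0, 0, 0]          # running per-channel sums of `pixels`
    skin_count = 0            # running count of skin labels in `flags`
    out: List[Pixel] = []

    for pixel, is_skin in zip(row, skin):
        # Shift the new pixel and its label into both buffers.
        pixels.append(pixel)
        flags.append(is_skin)
        for c in range(3):
            sums[c] += pixel[c]
        skin_count += int(is_skin)

        # Once the window is full, drop the oldest entry and update the running totals.
        if len(pixels) > window:
            old = pixels.popleft()
            for c in range(3):
                sums[c] -= old[c]
            skin_count -= int(flags.popleft())

        if skin_count > threshold:
            n = len(pixels)
            out.append((sums[0] // n, sums[1] // n, sums[2] // n))  # blurred: window average
        else:
            out.append(pixel)                                       # passed through unchanged
    return out
```

Keeping running sums means each new pixel costs one addition and one subtraction per channel instead of re-summing the whole window, which mirrors how a hardware accumulator could compute the "continuous" average.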

Algorithm 2: Using Bounding Box

- After skin detection, the segmented image is stored in a buffer in which each pixel is classified as skin or non-skin.

- The buffer is scanned vertically for runs of consecutive skin pixels; if a run exceeds 65 pixels (for a 1080x720 image), the region is treated as a face.

- The center of that run is then calculated, and a rectangular blur region is applied around that center.
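A Python model of Algorithm 2 is sketched below. The threshold `min_run=65` comes from the text. The column-by-column scan order, stopping at the first qualifying run, the box half-sizes, and the choice to "blur" by replacing the box with its mean color are all assumptions for illustration, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]


def find_face_center(mask: Sequence[Sequence[bool]],
                     min_run: int = 65) -> Optional[Tuple[int, int]]:
    """Return (row, col) at the middle of the first vertical run of skin pixels
    longer than min_run, scanning column by column; None if no run qualifies."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    for col in range(width):
        run_start = None
        # Iterate one row past the bottom so a run touching the last row is also closed.
        for row in range(height + 1):
            skin = row < height and mask[row][col]
            if skin and run_start is None:
                run_start = row
            elif not skin and run_start is not None:
                if row - run_start > min_run:        # run covers rows run_start .. row - 1
                    return ((run_start + row - 1) // 2, col)
                run_start = None
    return None


def blur_box(image: List[List[Pixel]], center: Tuple[int, int],
             half_h: int, half_w: int) -> List[List[Pixel]]:
    """Replace a rectangle around center (clipped to the image) with its mean color."""
    height, width = len(image), len(image[0])
    cy, cx = center
    top, bottom = max(0, cy - half_h), min(height, cy + half_h + 1)
    left, right = max(0, cx - half_w), min(width, cx + half_w + 1)
    region = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
    mean = tuple(sum(p[c] for p in region) // len(region) for c in range(3))
    out = [list(r) for r in image]                   # copy so the input stays untouched
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = mean
    return out
```

In use, `find_face_center` would run on the skin mask produced by the detection stage, and, if it returns a center, `blur_box` would be applied to the original frame around it.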

Audio anonymization

Audio anonymization was performed entirely on the Processing System (PS) using the PYNQ base overlay. A 25 Hz cosine wave was used to modulate the incoming audio in real time:

- 10 ms chunks were recorded from the onboard microphone (480 samples at 48 kHz).

- A phase-continuous 25 Hz cosine wave was generated for each chunk.

- The input audio was multiplied by the cosine wave, distorting the speech.

- The modulated audio was played back.
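The modulation step can be sketched in Python as follows. The 48 kHz sample rate, 480-sample chunks, and 25 Hz carrier come from the text; the function name and the way the phase is carried between calls are illustrative assumptions. Carrying the phase matters here because each 10 ms chunk covers only a quarter of a carrier period (2π · 25 Hz · 0.01 s = π/2), so restarting the cosine at phase 0 for every chunk would introduce a discontinuity every 10 ms.

```python
import math
from typing import List, Sequence, Tuple

SAMPLE_RATE = 48_000   # Hz, from the text
CHUNK_SIZE = 480       # samples per 10 ms chunk, from the text
MOD_FREQ = 25.0        # Hz, cosine modulator from the text


def modulate_chunk(samples: Sequence[float], phase: float,
                   freq: float = MOD_FREQ, fs: int = SAMPLE_RATE) -> Tuple[List[float], float]:
    """Multiply one chunk by cos(phase + 2*pi*freq*n/fs) and return the modulated
    chunk together with the phase at which the next chunk must start."""
    step = 2.0 * math.pi * freq / fs
    out = [s * math.cos(phase + n * step) for n, s in enumerate(samples)]
    next_phase = (phase + len(samples) * step) % (2.0 * math.pi)
    return out, next_phase


if __name__ == "__main__":
    # Synthetic 440 Hz tone split into 10 ms chunks, standing in for microphone input.
    tone = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(5 * CHUNK_SIZE)]
    phase = 0.0
    modulated: List[float] = []
    for start in range(0, len(tone), CHUNK_SIZE):
        chunk_out, phase = modulate_chunk(tone[start:start + CHUNK_SIZE], phase)
        modulated.extend(chunk_out)
    print(len(modulated))
```

Because the returned phase is fed into the next call, processing the signal in 10 ms chunks gives the same result as modulating it in one pass.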

Results

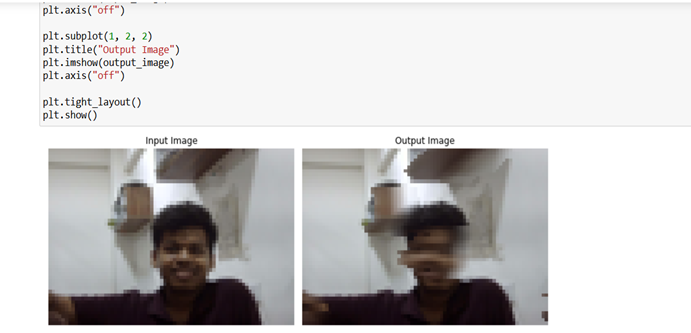

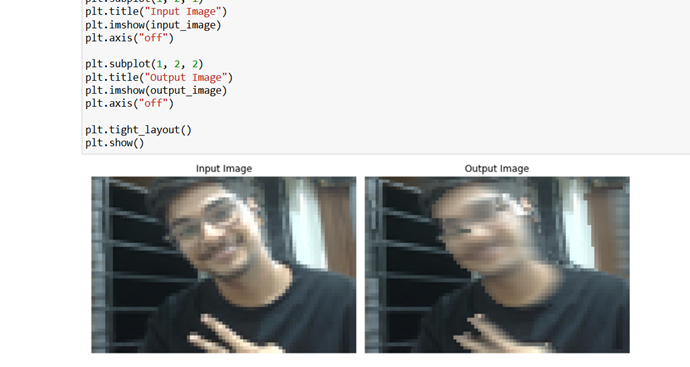

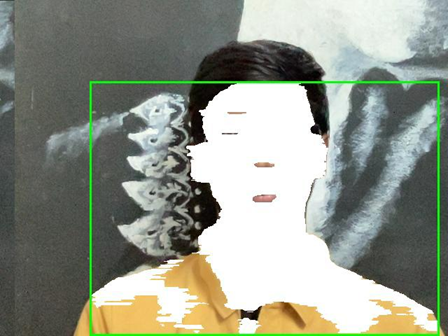

The system anonymized the test images as intended, both in simulation and in the hardware implementation on the FPGA. Sample images and their anonymized versions are shown below:

In simulation:


In FPGA:


Some other attempts:

Conclusions and Future Scope

In this project, we successfully implemented a skin-aware image blurring system in Verilog HDL using two separate blurring algorithms, and verified it both in simulation and on the PYNQ-Z2 FPGA board.

Simulation results and live FPGA tests with webcam input confirmed that the system correctly identifies and selectively blurs skin regions, achieving the intended effect in real time. The design demonstrates efficient use of hardware buffers and DMA-compatible streaming interfaces, making it well-suited for embedded computer vision tasks such as face anonymization or privacy-aware video processing.

This work lays a foundation for more advanced operations, such as spatial (2D) blurring, multi-region tracking, or even AI-enhanced detection, all within a hardware-accelerated pipeline.

References

https://www.sciencedirect.com/science/article/pii/S1877050915018918

https://www.youtube.com/playlist?list=PLXHMvqUANAFOviU0J8HSp0E91lLJInzX1

Report Information

Team Members


Report Details

Created: April 7, 2025, 12:25 a.m.

Approved by: Omkar Patil [Diode]

Approval date: April 7, 2025, 10:37 p.m.
